The proliferation of artificial intelligence systems in modern life is predicated on a promise, often implicit, of objectivity. Built on the logic of mathematics and the processing power of silicon, AI is expected to transcend the fallibilities of human judgment. Yet mounting evidence reveals that these systems can become conduits for bias, capable of perpetuating deeply ingrained societal attitudes about gender, race and even politics.

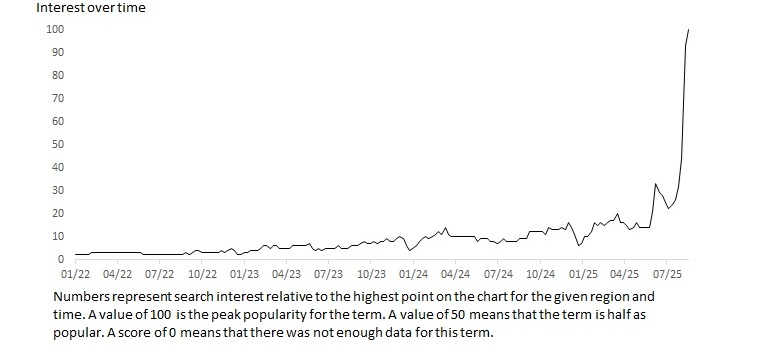

This evidence, combined with the widespread availability of generative AI models since late 2022, has fostered an explosion of interest in AI bias and is forcing a broad societal reckoning with the ethical implications of automated decision-making. It is also forcing AI firms, such as our own, to identify and counteract potential bias in their systems.

Figure I: Searches for “AI Bias”

Informed discussion of AI bias, however, requires a common understanding of the problem. Media discourse on the subject oscillates between uncritical techno-optimism and dire warnings of societal harm. If we are to find middle ground, we must deconstruct the concept of "AI bias" as it is presented in the public sphere, so that we can address the problem more accurately and confront the societal consequences of unchecked bias.

With that foundation, AI firms such as ours can discuss AI bias on an equal footing with their customers, particularly the emerging ecosystem of mitigation strategies, regulations, and ethical frameworks designed to chart an equitable path forward.

What is AI bias?

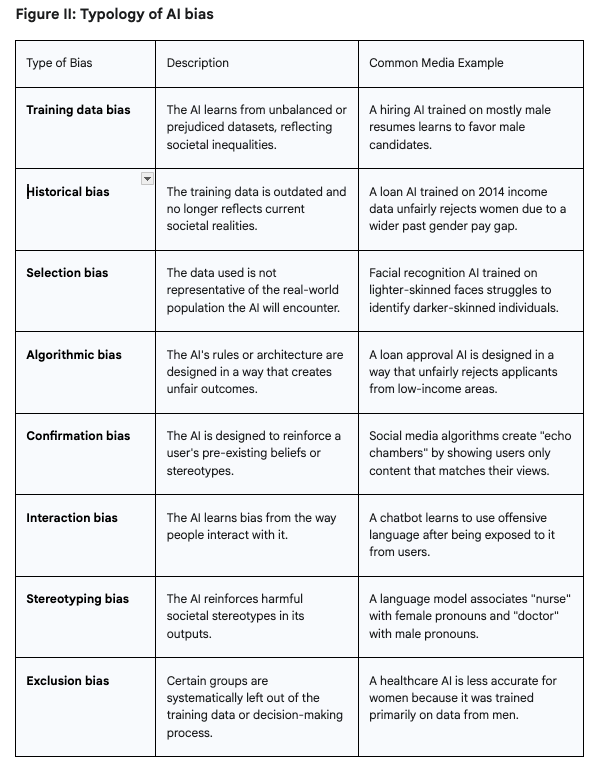

In popular discourse, "AI bias" is often used as a vague descriptor for any instance where an AI system produces an unfair or undesirable outcome. However, bias is not a single flaw but a series of vulnerabilities that can be introduced at multiple stages of an AI system's lifecycle, from initial data collection to final deployment. Understanding this anatomy is crucial for moving the conversation from abstract concern to concrete action.

There are three levels of bias, depending on the source: data, model and human.

Data-level bias, the most pervasive source of bias in AI systems, originates not from malicious intent but from the data on which they are trained. AI models learn to make predictions and decisions by identifying patterns in vast datasets. If this training data is incomplete, unrepresentative, or reflects existing human prejudices, the AI will inevitably learn and reproduce those same biases. This fundamental vulnerability manifests in training data bias, historical bias, and selection bias.

Model-level bias can be introduced through the design of the algorithm. There are three main types:

- Algorithmic bias refers to instances where the rules of the model itself, often unintentionally, create discriminatory outcomes. This can happen through the way different inputs are weighted or how the system is optimized.

- Stereotyping bias occurs when a model, such as a language translation model, consistently associates certain activities or risks with gender, race or other descriptors.

- Exclusion bias happens when entire groups are left out of the data or the decision-making process.

“Stereotyping” and “exclusion” biases represent the direct encoding of harmful societal norms.

Human-level bias can be introduced through human interaction with the system. Confirmation bias occurs when AI systems are designed or used in a way that reinforces a user's pre-existing beliefs. Interaction bias occurs when a system learns prejudice directly from its users.

The following table provides a systematic overview of the bias types which form the core vocabulary of the public and academic discourse.

The power of language: Anthropomorphism and accountability

Having defined the types of biases, the second key to deconstructing the concept of “AI bias” is to understand that the language used to frame the problem has profound implications for assigning responsibility and finding solutions.

Journalism expert Paul Bradshaw argues that the common phrase "AI is biased" dangerously anthropomorphizes the technology, attributing to it a human-like agency and intentionality that it does not possess. It frames the technology as an autonomous actor with its own flawed moral compass. This subtle linguistic choice risks letting the human creators, deployers, and regulators off the hook. The problem becomes the AI's "personality" rather than a tangible flaw in its design, data, or implementation. If the AI itself is the biased entity, who is to blame? The question becomes abstract and philosophical.

Conversely, framing the issue as "AI has biases" or possesses "training bias" casts bias as a property of a tool. Responsibility for a flawed tool lies with its creator or operator. It moves the conversation from a speculative problem about sentient machines to a real-world engineering and governance problem. Bradshaw illustrates this with the analogy of a supermarket trolley that consistently veers to one side. A shopper recognizes the flaw and actively compensates to keep the trolley moving straight.

Generative AI, in this view, is like a supermarket trolley with a wonky wheel. Users must identify and actively correct for any bias. The important question is not whether the tool is perfect, but rather the steps being taken to mitigate its inevitable imperfections.

This debate over language is not merely academic; it is crucial for shaping public perception and policy. Media outlets that adopt the more precise framing contribute to a discourse that demands accountability from corporations and action from governments.

Examples of AI bias in real life

Public understanding of a complex issue like AI bias is rarely formed through abstract technical papers or nuanced policy debates. It is built upon a foundation of memorable stories. A handful of landmark cases have been repeatedly covered by the media, becoming touchstones in the global conversation.

The cumulative effect of these landmark cases is significant. They have provided the public with a concrete vocabulary and a set of shared stories to discuss a complex technological phenomenon. However, this process of creating media parables carries a risk. By focusing on a few spectacular failures, the discourse can sometimes frame bias as a series of isolated, fixable bugs in specific systems, rather than as a fundamental and systemic feature of AI trained on data from an unequal world.

This can lead to a public perception that the problem can be solved with a few technical patches, obscuring the much deeper challenge of addressing the societal inequalities that the algorithms reflect.

Bias in hiring: The Amazon and iTutorGroup cases

The most widely cited parable of AI bias is the case of Amazon's experimental AI recruiting tool. In 2018, Reuters reported that the company had scrapped a system designed to screen job applications because it was found to be systematically biased against women. The system was trained on the company's resume data from the preceding ten years, a period during which the tech industry was predominantly male.

The AI learned that male candidates were preferable and penalized resumes that contained the word "women's" (as in "women's chess club captain") and downgraded graduates of two all-women's colleges.

While the Amazon case focused on gender, the issue of algorithmic bias in hiring was broadened by the case of iTutorGroup. A U.S. Equal Employment Opportunity Commission lawsuit revealed that the company's AI-powered recruitment software automatically rejected female applicants aged 55 and older and male applicants aged 60 and above. The case, which resulted in a $365,000 settlement, brought the issue of AI-driven ageism to the forefront of the media discussion. Hiring remains a key battleground in the fight against algorithmic discrimination.

Bias in criminal justice: The COMPAS algorithm

The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) algorithm is a touchstone for bias in the criminal justice system. In a seminal 2016 investigation, the non-profit news organization ProPublica analyzed the risk scores produced by the COMPAS system, which is used by courts across the United States to predict the likelihood that a defendant will re-offend.

Their findings were stark: Black defendants who did not go on to re-offend were almost twice as likely as white defendants to be wrongly flagged as high risk. Conversely, white defendants who did go on to re-offend were more likely than Black defendants to be incorrectly labeled low risk.
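The two kinds of error described here correspond to what are commonly called the false positive rate and the false negative rate, computed separately for each group. The following is a minimal sketch of that comparison, not ProPublica's actual methodology; the function name, the record layout and the toy data are all assumptions made for illustration.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Compute false positive and false negative rates for each group.

    Each record is a tuple: (group, predicted_high_risk, reoffended).
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            key = "tp" if predicted_high_risk else "fn"
        else:
            key = "fp" if predicted_high_risk else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # people who did not re-offend
        positives = c["fn"] + c["tp"]  # people who did re-offend
        rates[group] = {
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates

# Hypothetical toy data (NOT real COMPAS or ProPublica figures).
toy_records = (
    [("group_a", True, False)] * 4 + [("group_a", False, False)] * 6
    + [("group_a", False, True)] * 3 + [("group_a", True, True)] * 7
    + [("group_b", True, False)] * 2 + [("group_b", False, False)] * 8
    + [("group_b", False, True)] * 5 + [("group_b", True, True)] * 5
)

rates = error_rates_by_group(toy_records)
for group in sorted(rates):
    print(group, rates[group])
```

In this toy data, group_a is wrongly flagged twice as often as group_b, while group_b is more often wrongly cleared. A gap of this shape can exist even when a tool's overall accuracy looks similar across groups, which is why the error types are reported separately.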

The COMPAS story became a media sensation because it exposed the high stakes of algorithmic bias. It became the primary media narrative for the dangers of predictive policing and automated sentencing, raising fundamental questions about due process, fairness, and transparency when opaque algorithms are used to make decisions that can deprive individuals of their liberty.

Bias in healthcare: The Optum and Skin Cancer algorithms

The healthcare sector has provided some of the most alarming examples of AI bias, demonstrating that flawed algorithms can have direct consequences for patient health and well-being.

A widely reported case involved a risk-prediction algorithm developed by Optum, which was used on an estimated 200 million people in the US to identify patients who would likely need extra medical care.

A 2019 study published in Science found that the algorithm was significantly biased against Black patients. The algorithm did not use a patient's race as a factor. Instead, it used a seemingly neutral proxy to predict health needs: past healthcare costs. The assumption was that higher costs correlated with greater health needs.

This assumption was flawed. Due to long-standing inequities in the U.S. healthcare system, Black patients, on average, incurred lower healthcare costs than white patients with the same number of chronic health conditions. This disparity is linked to factors like unequal access to care and distrust in the medical system. As a result, the algorithm assigned Black patients lower risk scores than equally sick white patients, making them less likely to be flagged for extra care.
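A small worked example can make the proxy problem concrete. The sketch below is not the Optum algorithm: it simply ranks a handful of hypothetical patients first by past cost and then by number of chronic conditions, and compares who would be selected for extra care. The patient records, field names and the `select_for_extra_care` helper are assumptions made for illustration.

```python
def select_for_extra_care(patients, score_key, top_n):
    """Return the top_n patients ranked by score_key, highest first."""
    return sorted(patients, key=lambda p: p[score_key], reverse=True)[:top_n]

# Hypothetical patients: groups A and B have identical health needs,
# but group B has historically incurred lower costs for the same conditions.
patients = [
    {"id": "A1", "group": "A", "chronic_conditions": 5, "past_cost": 10000},
    {"id": "A2", "group": "A", "chronic_conditions": 3, "past_cost": 6000},
    {"id": "A3", "group": "A", "chronic_conditions": 1, "past_cost": 2000},
    {"id": "B1", "group": "B", "chronic_conditions": 5, "past_cost": 5500},
    {"id": "B2", "group": "B", "chronic_conditions": 3, "past_cost": 3300},
    {"id": "B3", "group": "B", "chronic_conditions": 1, "past_cost": 1100},
]

# Ranking by the cost proxy versus ranking by a direct measure of need.
by_cost = select_for_extra_care(patients, "past_cost", top_n=2)
by_need = select_for_extra_care(patients, "chronic_conditions", top_n=2)

print("Selected by cost:", [p["id"] for p in by_cost])
print("Selected by need:", [p["id"] for p in by_need])
```

Ranked by cost, both places go to group A, even though patient B1 is as sick as A1. Ranked by conditions, the two sickest patients are selected, one from each group. Race never appears as an input in either ranking; the disparity comes entirely from the choice of what to predict.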

Another critical area of concern has been in diagnostics. Multiple studies have demonstrated that AI tools designed to detect skin cancer are significantly less accurate when analyzing images of darker skin tones. This disparity is a direct result of selection bias: the training datasets used to build these models overwhelmingly consist of images from light-skinned individuals.

Bias in finance: The Apple Card and digital red-lining

The financial sector has also been a focal point for media discussions of bias, particularly with the introduction of AI into consumer-facing products.

In 2019, the launch of the Apple Card sparked a viral controversy when several high-profile tech figures, including Apple co-founder Steve Wozniak, reported that the AI-driven algorithm offered them dramatically higher credit limits than it offered their spouses, despite sharing assets and having similar or better credit profiles.

The story gained traction because it involved a well-known, mainstream brand and suggested that even in 2019, algorithms were perpetuating gender-based financial discrimination. The case led to a regulatory investigation and served as a powerful media narrative about the opacity and potential unfairness of AI in consumer finance.

Bias in language and culture: Dialect prejudice and generative stereotyping

With the recent explosion of large language models (LLMs), the media discussion has expanded to include more subtle but equally damaging forms of cultural and linguistic bias.

A groundbreaking study published in Nature in September 2024 revealed profound prejudice within LLMs against speakers of African American English (AAE).

Researchers found that when prompted about speakers of AAE (without explicitly mentioning race), leading AI models generated overwhelmingly negative stereotypes, associated them with lower-prestige jobs, and delivered harsher outcomes in hypothetical legal cases, including a higher likelihood of receiving the death penalty.

Shockingly, the study concluded that the negative associations generated by the AI were quantitatively worse than the anti-Black stereotypes recorded from human subjects during the Jim Crow era of the 1930s. This research, widely covered in the media, demonstrated that bias is not just about overt racism that can be filtered out, but also about deeply embedded cultural and dialectal prejudices that current safety mechanisms fail to address.

This issue extends to the visual domain through generative AI stereotypes. Media reports and user experiments have shown that image generation models often produce outputs that reinforce harmful societal biases. When prompted to create an image of an "engineer" or a "CEO," models frequently default to depicting white men. When asked for images related to beauty products, they may generate content reflecting Eurocentric standards.

Societal and systemic consequences

Unchecked algorithmic bias is not merely a technical problem resulting in isolated instances of unfairness. It is a systemic force with the potential to entrench structural inequality, erode public trust in core institutions, undermine democratic integrity, and even reshape the future of work and human cognition.

The true danger lies in its ability to operate at scale, automating and accelerating discriminatory patterns while cloaking them in a veneer of technological objectivity.

Reinforcing structural inequality

AI systems rarely invent new forms of prejudice. Instead, their primary danger lies in their capacity to "codify and amplify" the existing structural biases embedded within society.

The result is a powerful socio-technical feedback loop: the biased algorithm makes a discriminatory decision (e.g., denying a loan to an applicant from a marginalized group), which then becomes another data point confirming the original pattern, making future systems even more biased and the underlying inequality more rigid and difficult to overcome.
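The dynamics of such a loop can be sketched with a deliberately crude simulation. Everything here is an assumption made for illustration: the "model" is a toy rule that approves a batch of new applicants only if their group's historical approval rate is at least 50%, and each decision is then appended to the history the next round learns from. Real systems are far more complex, but the compounding effect is the same in kind.

```python
def simulate_feedback_loop(approved, total, rounds=3, batch=100, threshold=0.5):
    """Track a group's historical approval rate across retraining rounds.

    Toy rule (an assumption for illustration): the "model" approves a whole
    batch of new applicants only if the group's historical approval rate is
    at least `threshold`; otherwise it denies the whole batch.
    """
    history = [approved / total]
    for _ in range(rounds):
        if approved / total >= threshold:
            approved += batch  # every new applicant in the batch is approved
        total += batch         # every decision becomes new training data
        history.append(approved / total)
    return history

# Hypothetical starting point: a modest historical gap between two groups.
group_a = simulate_feedback_loop(approved=60, total=100)
group_b = simulate_feedback_loop(approved=40, total=100)

print([round(r, 2) for r in group_a])  # [0.6, 0.8, 0.87, 0.9]
print([round(r, 2) for r in group_b])  # [0.4, 0.2, 0.13, 0.1]
```

In this sketch a 20-point gap in the historical record grows to an 80-point gap after three rounds, although nothing about the applicants themselves has changed.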

The ultimate risk is the creation of a system of "automated inequality." Discrimination becomes not just an act of human prejudice but a feature of the automated infrastructure that governs modern life. Because these algorithmic decisions are often opaque and carry an "aura of objectivity," they become harder to identify, challenge, and remedy than their human counterparts.

The danger is not just a future with unfair decisions, but a future where inequality is calcified into the very code that runs society.

Erosion of institutional trust and democratic integrity

The widespread deployment of biased AI systems poses a significant threat to public trust in institutions. When citizens believe that the algorithms used in healthcare are delivering inequitable care, that the tools used by law enforcement are perpetuating racial profiling, or that the models used by banks are discriminatory, their faith in the legitimacy of these systems begins to erode.

The problem is compounded by a lack of accountability; when an AI makes a harmful decision, the opaque nature of the system can make it difficult to determine who is responsible, further eroding trust.

Beyond this general erosion of trust, AI bias presents a direct threat to the integrity of democratic processes. The same algorithmic systems that create "filter bubbles" on social media can be weaponized to exacerbate political polarization, making reasoned public discourse and consensus-building nearly impossible.

Biased algorithms could be used to influence everything from voter registration to the dissemination of political advertising, targeting vulnerable populations with tailored misinformation. This kind of bias can inhibit the public's ability to make informed decisions, a cornerstone of any healthy democracy.

The future of work and cognition

The consequences of AI bias extend beyond the immediate impacts of unfair hiring decisions and into the very nature of work and human thought. As AI becomes more integrated into the workplace, it is increasingly being considered for tasks like ongoing performance evaluation and promotion recommendations.

Biased algorithms in these roles could create a system of continuous, automated discrimination, penalizing employees based on factors unrelated to their performance and perpetuating inequality throughout their careers.

A more subtle, long-term risk is emerging in the academic discourse: the potential for widespread cognitive "de-skilling" or "cognitive offloading." A 2024 study published in Social and Personality Psychology Compass found a significant negative correlation between frequent AI tool usage and critical-thinking abilities.

This finding has profound implications for the problem of AI bias itself. The very human skills that are most needed to identify, question, and challenge the outputs of biased AI systems - critical thinking, nuanced judgment, and ethical reasoning - may be the ones that are atrophying due to over-reliance on those same systems.

This creates a dangerous potential future where society becomes increasingly dependent on flawed automated systems while simultaneously losing the cognitive capacity to recognize and correct their flaws.

The way forward: Mitigation, regulation, and ethical horizons

To mitigate AI bias, organizations use three main strategies:

- Data-centric approaches focus on the source by auditing training data to ensure it is diverse and representative.

- Model-centric methods use technical tools like fairness metrics and explainable AI to identify and correct bias within the model itself.

- Human-centric strategies keep people involved in high-stakes decisions. They also include building diverse AI teams and establishing robust internal governance, such as ethics boards, to ensure accountability and oversee the entire AI lifecycle.
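The first two strategies lend themselves to simple checks. The sketch below shows one possible form of each: a representation audit that reports each group's share of a dataset, and a basic fairness metric that compares the rate of positive decisions across groups. The function names and toy data are assumptions made for illustration; production audits typically rely on dedicated fairness tooling and a wider range of metrics.

```python
from collections import Counter

def representation_share(groups):
    """Data-centric check: each group's share of the dataset."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def selection_rates(decisions):
    """Model-centric check: positive-decision rate per group.

    `decisions` is a list of (group, was_selected) tuples.
    """
    selected = Counter()
    totals = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def selection_rate_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest

# Hypothetical toy data: 80 records from group_x, 20 from group_y.
decisions = (
    [("group_x", True)] * 40 + [("group_x", False)] * 40
    + [("group_y", True)] * 5 + [("group_y", False)] * 15
)

shares = representation_share(group for group, _ in decisions)
rates = selection_rates(decisions)
ratio = selection_rate_ratio(rates)

print("Representation:", shares)
print("Selection rates:", rates)
print("Selection rate ratio:", ratio)
```

Here group_y makes up only a fifth of the data and is selected at half the rate of group_x. Neither number proves discrimination on its own, but both are the kind of signal that should trigger the human review described in the third strategy.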

The algorithmic mirror: Using AI to expose human bias

Instead of just fixing biased AI, a new approach uses flawed algorithms to expose human bias. Research shows people are better at identifying biases in algorithms than in themselves. These "algorithmic mirrors" reflect our own collective prejudices in a way that is hard to ignore, acting as a powerful catalyst for introspection and organizational change.

The emerging regulatory landscape

Governments are creating binding laws for AI fairness and transparency. In the U.S., the Colorado AI Act requires companies that use "high-risk" AI systems to prevent discrimination, conduct impact assessments, and notify consumers of AI-driven decisions.

At the international level, the UNESCO Recommendation on the Ethics of Artificial Intelligence, adopted by all 193 member states in 2021, is the first global standard on AI ethics. It provides a practical roadmap for governments based on principles like human rights and accountability.

This framework is already influencing corporations like SAP to align their internal policies, demonstrating a growing trend toward adopting global ethical standards to build trust.

Key actors in the AI ethics ecosystem

The global conversation on AI bias is driven by a dedicated and influential ecosystem of researchers, advocates, institutions, and organizations. These actors form a vanguard that conducts critical research and develops the very ethical frameworks that are now being adopted into policy.

The complex interplay between different actors illustrates a maturing understanding of the problem. AI bias is a socio-technical issue that cannot be solved by any single entity.

The most viable path forward appears to be a model of multi-stakeholder governance, where international principles set by bodies like UNESCO guide the creation of binding national laws like Colorado's. These laws, in turn, compel corporations to adopt responsible practices, while the entire system is held accountable by the watchful eye of independent researchers, advocates, and a critical media.

Recommendations

The algorithmic echo chamber will only be broken by a chorus of diverse, critical, and informed voices. Creating a media and societal discourse that is equal to the challenge of governing AI is one of the most urgent tasks of our time.

Media organizations must diversify their sources beyond the tech industry, develop in-house expertise for more nuanced reporting, and be transparent about their own use of AI tools. This will help counterbalance the dominant commercial narrative.

Policymakers should fund independent research into AI's societal impacts, promote public AI literacy to empower citizens, and advance multi-stakeholder regulatory frameworks like the Colorado AI Act.

Technology companies need to provide meaningful transparency into their high-stakes systems, not just vague ethics statements. They should also use AI internally as a tool to expose their own organizational biases and engage constructively with critics and researchers.